Published: December 26, 2025

Reading Time: 1 min read

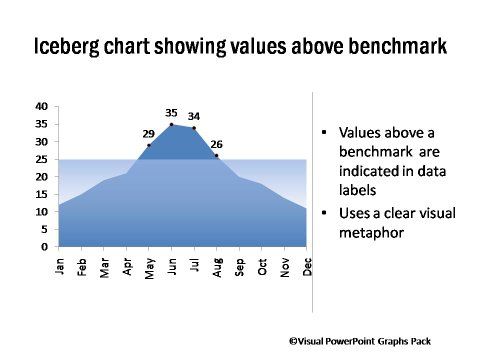

The new IceBerg benchmark reveals a critical gap: leading vector search algorithms such as HNSW do not always perform best in real-world AI tasks such as retrieval-augmented generation (RAG). Its authors call for shifting evaluation from speed and recall toward actual application performance.

Vector retrieval expert Fu Cong, together with a research team from Zhejiang University, has introduced IceBerg, a new benchmark designed to expose significant gaps between standard vector retrieval evaluations and real downstream task performance.

The study highlights that in applications such as RAG, developers often default to algorithms like HNSW. However, when tested against real semantic tasks, HNSW is not consistently the optimal choice.

IceBerg provides a multimodal benchmark and an automated algorithm selection framework to help developers choose suitable retrieval algorithms. The team argues that research must focus more on real downstream performance.
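To make the gap concrete, here is a minimal toy sketch in Python. It is not IceBerg's code or methodology: the vectors, the `relevant` labels, and the function names `top_k_exact`, `recall_at_k`, and `task_hit_rate` are all hypothetical, chosen only to show how a retriever can score perfectly on recall against exact nearest neighbors while returning nothing useful for the task.

```python
import math

def top_k_exact(query, vectors, k):
    """Brute-force Euclidean top-k: indices of the k closest vectors."""
    order = sorted(range(len(vectors)), key=lambda i: math.dist(query, vectors[i]))
    return order[:k]

def recall_at_k(retrieved, exact):
    """Standard retrieval metric: overlap with the exact nearest neighbors."""
    return len(set(retrieved) & set(exact)) / len(exact)

def task_hit_rate(retrieved, relevant):
    """Hypothetical downstream metric: share of results the task actually needs."""
    return len(set(retrieved) & relevant) / len(retrieved)

# Toy data (assumption): items 2 and 3 are the ones the task needs,
# but they are far from the query in embedding space.
vectors = [(0.0, 0.1), (0.1, 0.0), (1.0, 1.0), (0.9, 1.1), (0.2, 0.2)]
query = (0.0, 0.0)
relevant = {2, 3}
k = 2

exact = top_k_exact(query, vectors, k)

retrieved_a = exact    # retriever A: reproduces the exact neighbors
retrieved_b = [2, 4]   # retriever B: misses the exact neighbors, finds a relevant item

for name, retrieved in [("A", retrieved_a), ("B", retrieved_b)]:
    print(name,
          "recall@k =", recall_at_k(retrieved, exact),
          "task hit rate =", task_hit_rate(retrieved, relevant))
```

In this toy setup, retriever A wins on recall yet loses on the task metric, which is the kind of mismatch the benchmark is designed to surface.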

Source: liangziwei